Virtual Co-Locator

customized to suit different settings and topics

Background

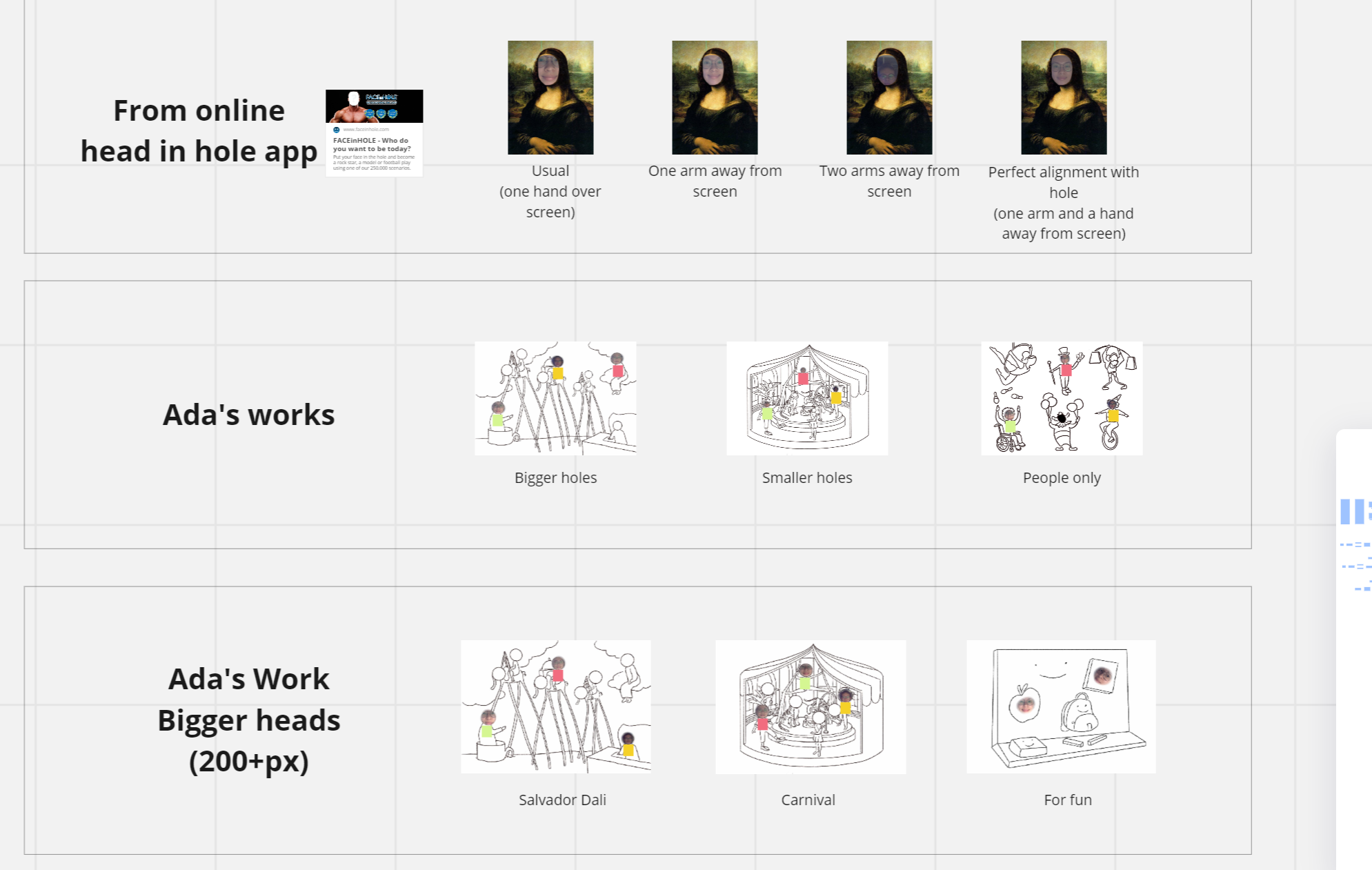

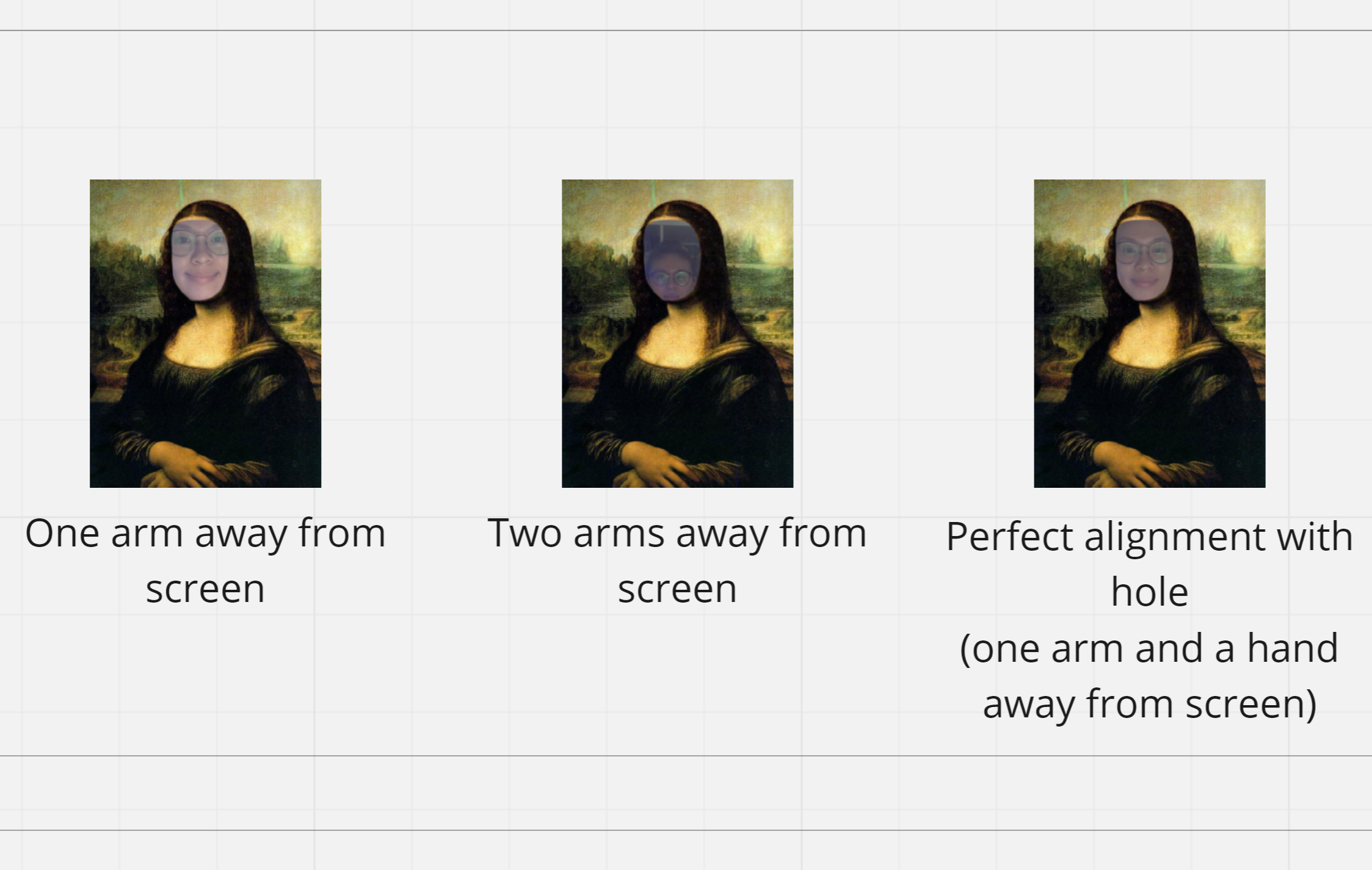

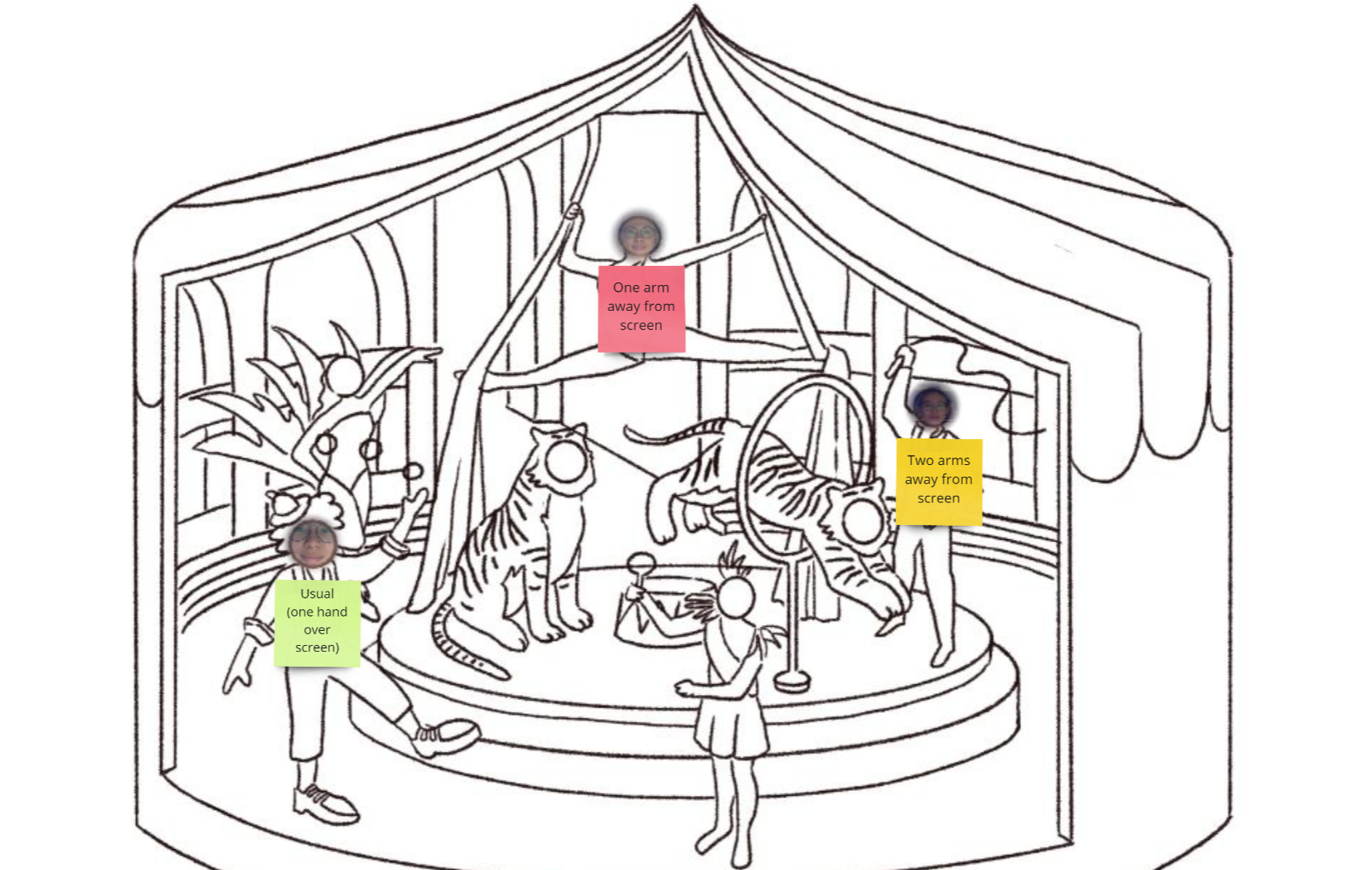

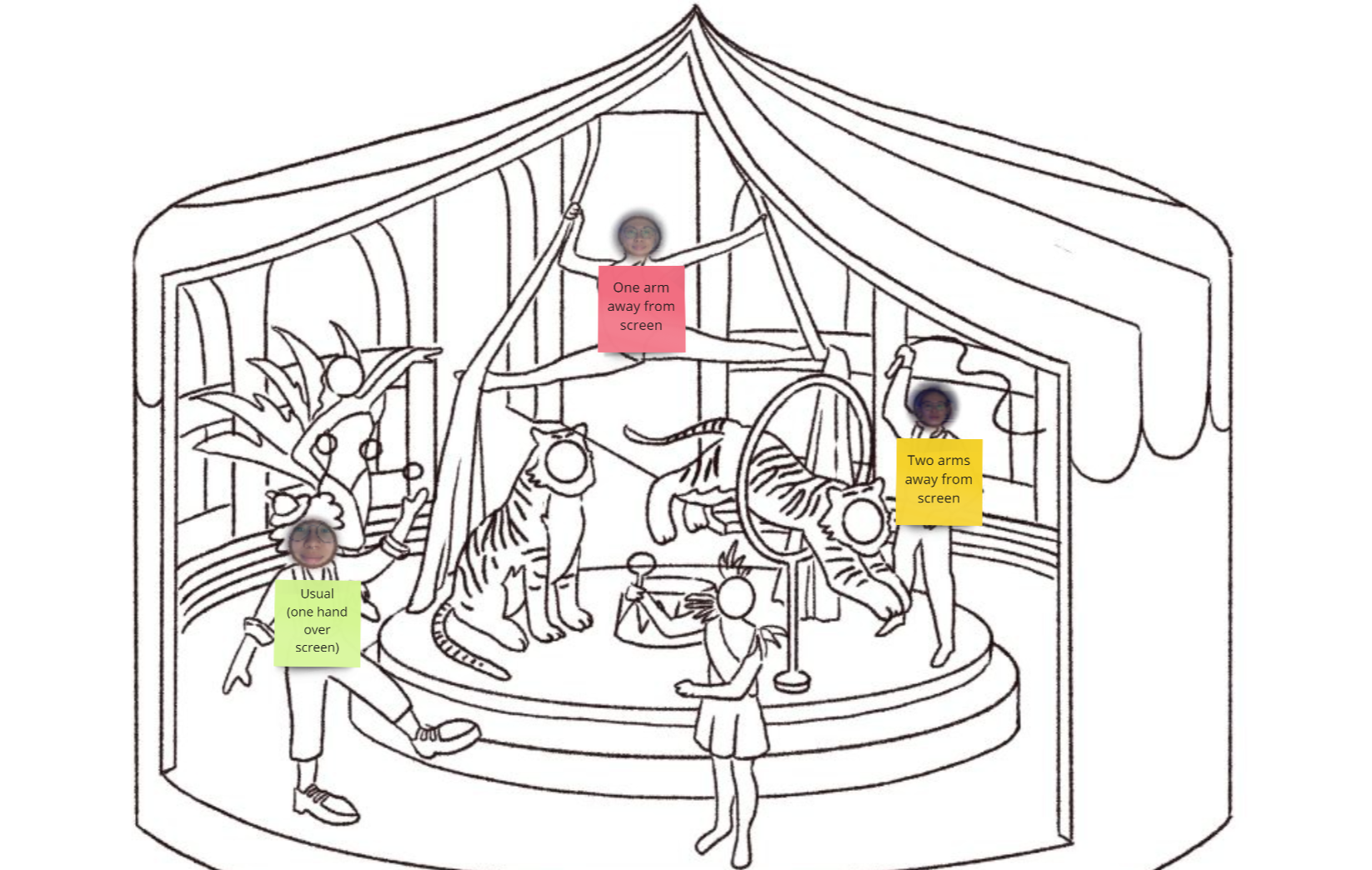

Video calls have limited features that don’t create the same professional atmosphere and interactive environments that exist in meetings in person. The past year of remote offices and meetings has made one thing clear: there exists a massive opportunity for software developers to elevate the online working experience. Virtual Co-Locator aims to address this gap by creating an alternative video calling platform that seeks to include more features and effects such as facial detection and creative backgrounds to allow video meetings to be customized to suit different settings and topics. For example, for calls that involve round table discussions, Virtual Co-Locator is working on leveraging facial detection technology to superimpose attendees’ videos in a shared virtual background that would look like a round table office environment. The basic idea is to make video meetings more interactive and create a virtual environment that enhances the traditional video calling experience.

Overview

The first stage of this project involved developing a server using Node.js, an open-source JavaScript runtime environment. Then, the team implemented peer-to-peer (P2P) technology using WebRTC, which allows for a decentralized way for computers to connect with each other. The peer-to-peer technology works by receiving a message from one client called the watcher, and another client called the broadcaster. Once the watcher and broadcaster are registered in the server, the server makes a direct connection between the watcher client and the broadcaster client, allowing for direct communication between the two computers and facilitating the transmission of audio and video streams.

To put this in a classroom context, a professor could join the watcher client of this server and have the students join the broadcaster client for the same session and host a virtual classroom call. The advantage of using a peer-to-peer network is that it is a decentralized way for computers to connect with each other and greatly diminishes the load on the server, as once the computers have been connected, the server does not have to process sending audio and video streams between computers.

Currently, the team at EML is working on implementing facial recognition models on the first iteration of the Virtual Co-Locator web application. The team is gathering premade facial recognition models from TensorFlow, an open-source machine learning library.

By the end of this term (Summer 2021), the team hopes to provide a publicly accessible Node.js server that does virtual co-location of four people in a background of their choosing. The eventual goal is to iterate upon this and create a Virtual Co-Locator web application which would be an interactive alternative to conventional video calling platforms by allowing for more features and virtual environment simulations.

Some examples of different meeting environments that can be implemented in Virtual Co-Locator include coffee shops, studios, classroom halls, seminars, roundtables, and keynote speeches.

The Team

Principal Investigator(s)

- Patrick Pennefather

EML Staff

- Dante Cerron

Students

- Elyse Wall

- Olivia Chen

- Ada Tam